Hardware

I spent the long Presidents’ Day weekend setting up my home server. I got a mini PC with a Ryzen 5 5560U and added a spare RAM stick and an SSD I had bought a while ago. In hindsight, the CPU is overkill; a cheaper Intel N100 would have been enough.

Ubuntu

Initially, I installed Ubuntu Server 22.04 LTS on the machine. The process was painless: I copied the compose.yaml file over from my Windows laptop and ran it on the new server. I got all my apps working in no time without running into any problems.

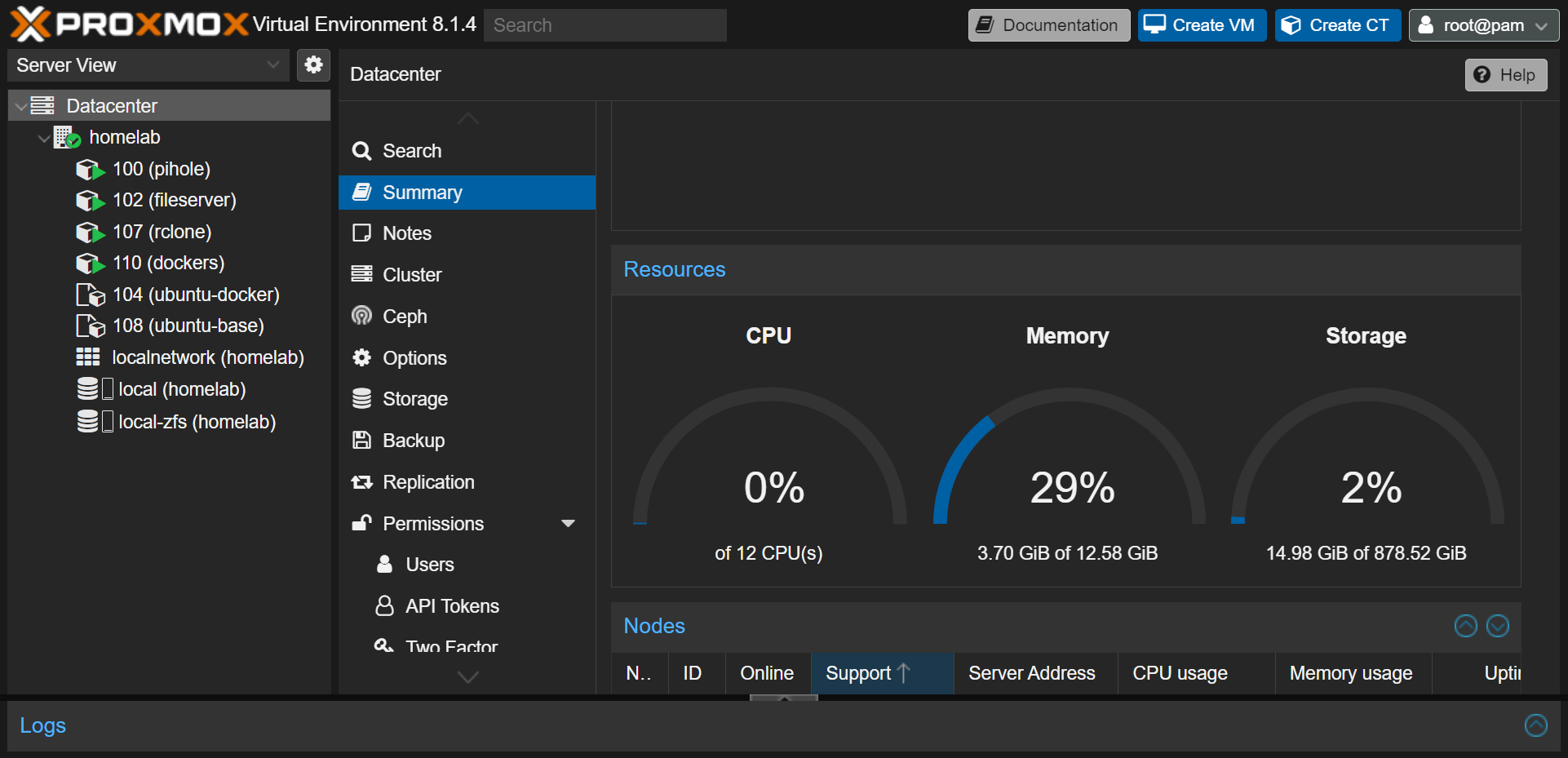

Proxmox

Installing Ubuntu Server was too easy, so I decided to try out Proxmox. My original idea was to install Proxmox, then create an Ubuntu VM to run Docker. It would be almost the same setup as Ubuntu Server on bare metal, but with more flexibility for future expansion (creating new VMs for testing stuff).

WLAN

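Proxmox doesn’t work well over WLAN (most Wi-Fi adapters can’t be added to the Linux bridge that VMs and containers use for networking), so I moved my server box from the closet to sit right next to the router.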

Multiple Reinstalls

During installation, I selected RAID 0 to create one big ZFS pool from two drives. A few hours later, I realized I should have picked RAID 1 (mirroring) instead of striping: with a stripe, losing either drive means losing the whole pool.
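To make the trade-off concrete, here’s a rough sketch of the arithmetic for a two-drive pool. It ignores ZFS metadata and reservation overhead, and the 500 GB drive sizes are made up for illustration.

```python
def pool_summary(layout, drive_sizes_gb):
    """Rough usable capacity and fault tolerance for a ZFS stripe or mirror (overhead ignored)."""
    if layout == "stripe":  # RAID 0: blocks are spread across every drive
        return {"usable_gb": sum(drive_sizes_gb), "failures_tolerated": 0}
    if layout == "mirror":  # RAID 1: every drive holds a full copy, so the smallest drive sets the size
        return {"usable_gb": min(drive_sizes_gb), "failures_tolerated": len(drive_sizes_gb) - 1}
    raise ValueError(f"unknown layout: {layout}")


for layout in ("stripe", "mirror"):
    print(layout, pool_summary(layout, [500, 500]))  # two hypothetical 500 GB drives
```

The mirror gives up half the raw space, but it keeps working after one drive dies, which is what matters for a box holding data I care about.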

I installed Samba inside an LXC container, added its SMB share to Proxmox as storage, and then attached that storage as a mount point to another LXC container. Resizing the mount point several times, until it was bigger than the Samba container’s own disk, broke the system. Even after a restart, it didn’t come up.

One good thing to come out of these reinstalls was discovering the Proxmox post-install script, which removes the nagging subscription notice.

Shared Folders

The internet told me that I could run Docker inside an LXC container instead of a VM for better performance, since containers share the host kernel and have less overhead. I created a few unprivileged LXC containers for testing and realized that I needed to share files between them. NFS was one suggested solution. I tried it but couldn’t get it to work inside an unprivileged container.

After more googling, I found out about LXC bind mounts, which were easier to set up. Because the host and the LXC containers run on the same machine, a bind mount can expose a host directory directly inside each container.

First, I created a new user on the host with UID 1000 and GID 1000, then added these lines to /etc/pve/lxc/101.conf (101 is the container ID):

lxc.idmap: u 0 100000 1000

lxc.idmap: g 0 100000 1000

lxc.idmap: u 1000 1000 1

lxc.idmap: g 1000 1000 1

lxc.idmap: u 1001 101001 64535

lxc.idmap: g 1001 101001 64535
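The six lines above follow one pattern: container IDs below 1000 are shifted into the unprivileged host range starting at 100000, ID 1000 passes through unchanged, and everything above 1000 is shifted again. Here’s a small sketch that generates them and sanity-checks the result. It assumes the Proxmox default of 65536 IDs per container starting at host ID 100000 (the usual root:100000:65536 entry in /etc/subuid); the function names are my own.

```python
def idmap_lines(shared_id=1000, host_base=100000, total=65536):
    """Build lxc.idmap lines that map container id `shared_id` 1:1 to the host
    and shift every other container id into the unprivileged host range."""
    ranges = [
        (0, host_base, shared_id),                                          # container 0..shared_id-1
        (shared_id, shared_id, 1),                                          # the shared id, unshifted
        (shared_id + 1, host_base + shared_id + 1, total - shared_id - 1),  # everything above it
    ]
    lines = []
    for container_start, host_start, count in ranges:
        for kind in ("u", "g"):  # same layout for user ids and group ids
            lines.append(f"lxc.idmap: {kind} {container_start} {host_start} {count}")
    return lines


def covers_all_ids(lines, total=65536):
    """Check that container ids 0..total-1 are each mapped exactly once, for users and groups."""
    ranges = {"u": [], "g": []}
    for line in lines:
        kind, container_start, _host_start, count = line.split(":", 1)[1].split()
        ranges[kind].append((int(container_start), int(count)))
    for spans in ranges.values():
        expected = 0
        for start, count in sorted(spans):
            if start != expected:  # a gap or an overlap
                return False
            expected += count
        if expected != total:
            return False
    return True


def host_ids_allowed(lines, allowed=((100000, 65536), (1000, 1))):
    """Check every host range sits inside an allowed (start, count) range, like /etc/subuid entries."""
    for line in lines:
        _kind, _container_start, host_start, count = line.split(":", 1)[1].split()
        lo, hi = int(host_start), int(host_start) + int(count)
        if not any(start <= lo and hi <= start + n for start, n in allowed):
            return False
    return True


for line in idmap_lines():
    print(line)
```

The host_ids_allowed check is why the host also needs the extra subuid/subgid entry for ID 1000: without it, the 1:1 mapping for 1000 falls outside the default range and the container won’t start.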

I added this line to both /etc/subuid and /etc/subgid, which allows root to map host ID 1000 into containers:

root:1000:1

Inside the LXC container, I created the same user with UID 1000 and GID 1000. This user now has the same UID and GID on both the host and the container.

Then I added another line to /etc/pve/lxc/101.conf:

lxc.mount.entry: /rpool/abc mnt/abc none bind,rw 0 0

/rpool/abc is a ZFS dataset on the host, and mnt/abc is a directory inside the LXC container (the path is relative to the container’s root filesystem). Both are owned by the new user. After restarting the LXC container, everything worked with the correct permissions.
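The lxc.mount.entry value uses the same six fields as an /etc/fstab line. This sketch splits the entry from this post into those fields to show what each part means; the MountEntry type and parse_mount_entry function are just illustrations, not part of Proxmox or LXC.

```python
from typing import List, NamedTuple


class MountEntry(NamedTuple):
    source: str         # path on the Proxmox host
    target: str         # path in the container; a relative path is taken from the container's rootfs
    fstype: str         # "none" is the usual placeholder for bind mounts
    options: List[str]  # e.g. ["bind", "rw"]
    dump: int
    passno: int


def parse_mount_entry(line: str) -> MountEntry:
    """Split an lxc.mount.entry line into its fstab-style fields."""
    key, value = line.split(":", 1)
    if key.strip() != "lxc.mount.entry":
        raise ValueError(f"not an lxc.mount.entry line: {line!r}")
    source, target, fstype, options, dump, passno = value.split()
    return MountEntry(source, target, fstype, options.split(","), int(dump), int(passno))


entry = parse_mount_entry("lxc.mount.entry: /rpool/abc mnt/abc none bind,rw 0 0")
print(entry.source, "->", "/" + entry.target, entry.options)
```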

Afterward, I converted the container to a template.

Current setup

I’m only using LXC containers: one runs Docker Compose, and the others run apps that I found easier to install directly. All my Docker Compose files are here.

Pi-hole

Initially, I used my router as the DHCP server, but it couldn’t resolve LAN hostnames: running ping random-lan-hostname failed.
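The same check can be scripted. This is a minimal sketch using Python’s standard socket module; it asks the system resolver (hosts file plus whatever DNS server DHCP handed out) for an IPv4 address, which is roughly what ping does before sending anything.

```python
import socket
from typing import Optional


def resolve(hostname: str) -> Optional[str]:
    """Return the IPv4 address the system resolver finds for hostname, or None."""
    try:
        return socket.gethostbyname(hostname)
    except socket.gaierror:  # no hosts-file entry and no DNS server knows the name
        return None
```

With the router handing out DNS, resolve("random-lan-hostname") would be expected to return None; once a DNS server that knows the DHCP leases is in place, it should return the device’s LAN address.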

I set up Pi-hole to block ads and act as the DHCP server. Pointing my router at Pi-hole for DNS was a learning experience. I effectively bricked my router a couple of times because I forgot to turn off the router’s own DHCP server, used the wrong LAN subnet mask…
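A wrong subnet mask is easy to catch before touching the router. Here’s a sketch using Python’s ipaddress module that checks a LAN plan: Pi-hole and the DHCP pool must sit inside the router’s subnet, and Pi-hole’s fixed address shouldn’t be inside the pool. All addresses below are hypothetical examples, not my actual network.

```python
import ipaddress
from typing import List


def check_lan(router: str, subnet_mask: str, pihole: str, dhcp_start: str, dhcp_end: str) -> List[str]:
    """Return a list of problems with a LAN/DHCP plan (empty list = looks consistent)."""
    lan = ipaddress.ip_interface(f"{router}/{subnet_mask}").network  # e.g. 192.168.1.0/24
    problems = []
    for name, addr in (("pihole", pihole), ("dhcp_start", dhcp_start), ("dhcp_end", dhcp_end)):
        if ipaddress.ip_address(addr) not in lan:
            problems.append(f"{name} {addr} is outside {lan}")
    start, end = ipaddress.ip_address(dhcp_start), ipaddress.ip_address(dhcp_end)
    if start > end:
        problems.append("dhcp_start is after dhcp_end")
    if start <= ipaddress.ip_address(pihole) <= end:
        # Pi-hole needs a fixed address that the DHCP pool won't hand out to another device
        problems.append(f"pihole {pihole} is inside the DHCP pool")
    return problems


print(check_lan("192.168.1.1", "255.255.255.0", "192.168.1.2", "192.168.1.100", "192.168.1.200"))
print(check_lan("192.168.1.1", "255.255.255.128", "192.168.1.2", "192.168.1.100", "192.168.1.200"))
```

With the /25 mask (255.255.255.128) the subnet only covers .0 to .127, so the end of the DHCP pool falls outside it.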

If Pi-hole went offline, I would lose internet access, so for now I’m using Cloudflare DNS as the secondary DNS server. A future project is to get another mini PC to run a second Pi-hole for high availability (HA). I need to look into Gravity Sync for keeping the two instances in sync.

Cloudflared Tunnel

I use tunnels to expose some of my internal apps to the public internet, so I don’t need a VPN to reach them. They are protected behind Cloudflare Access.
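If a tunnel is configured locally through a cloudflared config.yml (rather than from the Cloudflare dashboard), each public hostname is an ingress rule pointing at an internal service, and cloudflared expects the last rule to be a catch-all. This sketch builds such a config; the tunnel name, credentials path, hostname, and service URL are all hypothetical, and is_valid_ingress is a simplified check that ignores path-based rules.

```python
import json
from typing import Dict, List


def build_ingress(routes: Dict[str, str]) -> List[dict]:
    """Turn {public hostname: internal service URL} into cloudflared ingress rules."""
    rules = [{"hostname": host, "service": service} for host, service in routes.items()]
    # cloudflared rejects a config whose last rule doesn't match everything, so end with a catch-all
    rules.append({"service": "http_status:404"})
    return rules


def is_valid_ingress(rules: List[dict]) -> bool:
    """Simplified check: every rule but the last has a hostname; the last one is a catch-all."""
    if not rules or "hostname" in rules[-1]:
        return False
    return all("hostname" in rule for rule in rules[:-1])


config = {
    "tunnel": "my-tunnel",                                    # hypothetical tunnel name
    "credentials-file": "/root/.cloudflared/my-tunnel.json",  # hypothetical path
    "ingress": build_ingress({"app.example.com": "http://localhost:8080"}),  # hypothetical route
}
print(json.dumps(config, indent=2))  # JSON is also valid YAML, so this can be saved as config.yml
```

Cloudflare Access sits in front of the public hostname and is set up in the Cloudflare dashboard, so it doesn’t appear in this file.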

Aggregate logs

SSHing into each box to debug Docker containers took too much time.

So I’m using the EFK stack (Elasticsearch, Fluentd, Kibana) to aggregate and analyze logs. Docker’s Fluentd logging driver sends container logs over the network to a Fluentd collector, which forwards them to Elasticsearch, and Kibana visualizes them.
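On the Docker side, switching a service to the Fluentd driver is a small logging block in the compose file. This sketch adds that block to a service definition and prints the result as JSON (which compose also accepts, since JSON is valid YAML). The collector address and the nginx service are hypothetical; 24224 is Fluentd’s default forward port.

```python
import json


def with_fluentd_logging(service: dict, collector: str = "192.168.1.20:24224") -> dict:
    """Return a copy of a compose service definition that ships its logs to Fluentd."""
    configured = dict(service)
    configured["logging"] = {
        "driver": "fluentd",
        "options": {
            "fluentd-address": collector,  # hypothetical collector host:port
            "tag": "docker.{{.Name}}",     # Docker fills in the container name, handy for filtering in Kibana
        },
    }
    return configured


compose = {"services": {"web": with_fluentd_logging({"image": "nginx"})}}
print(json.dumps(compose, indent=2))
```

One thing to watch: by default a container using this driver fails to start if it can’t reach the collector, so Fluentd should come up first (Docker’s fluentd-async option relaxes this).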

I limit Elasticsearch to 1 GB of memory, and it still works well with my current setup.
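One common way to cap Elasticsearch’s memory in Docker is to pin the JVM heap through ES_JAVA_OPTS, with the minimum and maximum set to the same value. Here’s a sketch of a compose service doing that, assuming the limit is applied via the heap; the image version is a hypothetical pick.

```python
import json


def elasticsearch_service(heap: str = "1g", version: str = "8.12.0") -> dict:
    """Compose service for a single-node Elasticsearch with a fixed JVM heap size."""
    return {
        "image": f"docker.elastic.co/elasticsearch/elasticsearch:{version}",  # version is a hypothetical pick
        "environment": [
            "discovery.type=single-node",           # no cluster discovery on a one-box homelab
            f"ES_JAVA_OPTS=-Xms{heap} -Xmx{heap}",  # min and max heap set to the same value
        ],
    }


print(json.dumps({"services": {"elasticsearch": elasticsearch_service()}}, indent=2))
```

The heap isn’t the whole process footprint, so if a container-level memory limit is also set, it should leave some room above the heap size.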

Samba

I use Samba to share files between VMs.

WIP

I’m planning to create a single-node k3s cluster.

Packer

It took some trial and error, but I got the cloud-config file working with the Packer Proxmox plugin. My template for building an Ubuntu 22.04 VM with k3s is on GitHub.
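For anyone curious what such a file looks like, here’s a sketch that renders minimal cloud-init user-data: create a user, install the QEMU guest agent (Proxmox and Packer use it to read the VM’s IP), and run the official k3s install script on first boot. The username and SSH key are placeholders, and this is plain first-boot user-data, not necessarily the Ubuntu autoinstall format a Packer ISO build uses or the exact file in my template.

```python
def k3s_user_data(username: str, ssh_public_key: str) -> str:
    """Build minimal cloud-init user-data that creates a user and installs k3s on first boot."""
    lines = [
        "#cloud-config",  # cloud-init only treats the file as cloud-config with this header
        "users:",
        f"  - name: {username}",
        "    sudo: ALL=(ALL) NOPASSWD:ALL",
        "    shell: /bin/bash",
        "    ssh_authorized_keys:",
        f"      - {ssh_public_key}",
        "package_update: true",
        "packages:",
        "  - curl",
        "  - qemu-guest-agent",  # lets Proxmox (and Packer) read the VM's IP address
        "runcmd:",
        "  - systemctl start qemu-guest-agent",
        "  - curl -sfL https://get.k3s.io | sh -",  # k3s install script; sets up a single-node server by default
    ]
    return "\n".join(lines) + "\n"


print(k3s_user_data("ubuntu", "ssh-ed25519 AAAAexample user@laptop"))  # placeholder user and key
```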

Run 1 app

Test-run one app inside the cluster and check whether I can access it from outside.
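A likely first test is a throwaway nginx Deployment exposed through a NodePort Service, which makes it reachable at http://<node-ip>:<nodePort> from other machines on the LAN. This sketch builds both manifests as a v1 List that kubectl apply -f accepts as JSON; the app name, image, and port 30080 are hypothetical choices.

```python
import json


def hello_app(node_port: int = 30080) -> dict:
    """Deployment + NodePort Service for a throwaway nginx app, wrapped in a v1 List."""
    if not 30000 <= node_port <= 32767:  # Kubernetes' default NodePort range
        raise ValueError(f"nodePort {node_port} is outside 30000-32767")
    labels = {"app": "hello"}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "hello"},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},  # must match the pod template labels below
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": "hello", "image": "nginx", "ports": [{"containerPort": 80}]}]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "hello"},
        "spec": {
            "type": "NodePort",
            "selector": labels,  # routes traffic to the Deployment's pods
            "ports": [{"port": 80, "targetPort": 80, "nodePort": node_port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


print(json.dumps(hello_app(), indent=2))
```

Saving the output as hello.json and running k3s kubectl apply -f hello.json on the node should be enough for a quick check. NodePort is just the simplest option; k3s also ships with Traefik as an ingress controller, which would be the next thing to try for hostname-based routing.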